Malliavin calculus

The Malliavin calculus, named after Paul Malliavin and also called the stochastic calculus of variations, extends the calculus of variations from functions to stochastic processes. In particular, it allows the computation of derivatives of random variables.



Overview and history

Paul Malliavin's stochastic calculus of variations extends the calculus of variations from functions to stochastic processes. In particular, it allows the computation of derivatives of random variables.

Malliavin invented his calculus to provide a stochastic proof that Hörmander's condition implies the existence of a density for the solution of a stochastic differential equation; Hörmander's original proof was based on the theory of partial differential equations. His calculus enabled Malliavin to prove regularity bounds for the solution's density. The calculus has been applied to stochastic partial differential equations.

Invariance principle

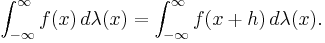

The usual invariance principle for Lebesgue integration over the whole real line is that, for any real number h and integrable function f, the following holds

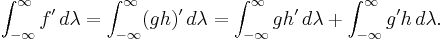

This can be used to derive the integration by parts formula since, setting f = gh and differentiating with respect to h on both sides, it implies

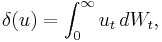

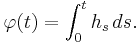
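As a quick sanity check of the classical identity, namely that the integrals of g h' and of g' h over the real line sum to zero, one can plug in concrete functions. The choices g(x) = e^{-x^2} and h(x) = x below are illustrative assumptions, not taken from the text:

```latex
\begin{aligned}
\int_{-\infty}^{\infty} g h' \, d\lambda
  &= \int_{-\infty}^{\infty} e^{-x^2} \, dx = \sqrt{\pi}, \\
\int_{-\infty}^{\infty} g' h \, d\lambda
  &= \int_{-\infty}^{\infty} \bigl(-2x e^{-x^2}\bigr)\, x \, dx
   = -2 \cdot \frac{\sqrt{\pi}}{2} = -\sqrt{\pi}, \\
\int_{-\infty}^{\infty} (gh)' \, d\lambda
  &= \sqrt{\pi} - \sqrt{\pi} = 0 .
\end{aligned}
```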

A similar idea can be applied in stochastic analysis for the differentiation along a Cameron-Martin-Girsanov direction. Indeed, let  be a square-integrable predictable process and set

be a square-integrable predictable process and set

If  is a Wiener process, the Girsanov theorem then yields the following analogue of the invariance principle:

is a Wiener process, the Girsanov theorem then yields the following analogue of the invariance principle:

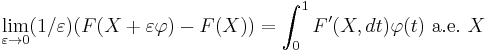

Differentiating with respect to ε on both sides and evaluating at ε=0, one obtains the following integration by parts formula:

Here, the left-hand side is the Malliavin derivative of the random variable  in the direction

in the direction  and the integral appearing on the right hand side should be interpreted as an Itô integral. This expression remains true (by definition) also if

and the integral appearing on the right hand side should be interpreted as an Itô integral. This expression remains true (by definition) also if  is not adapted, provided that the right hand side is interpreted as a Skorokhod integral.

is not adapted, provided that the right hand side is interpreted as a Skorokhod integral.
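The integration by parts formula of this section, E(⟨DF(X), φ⟩) = E[F(X) ∫ h dX], can be checked numerically in a simple case. The sketch below is only an illustration under stated assumptions: the functional F(X) = X_1^3, the direction h ≡ 1 (so φ(t) = t), and the function name `check_integration_by_parts` are all chosen here for convenience and do not come from the text. With these choices only the terminal value X_1 ~ N(0, 1) matters, the directional derivative is approximated by a central difference in ε, and both sides should be close to 3.

```python
import random


def check_integration_by_parts(n_paths=200_000, eps=1e-3, seed=0):
    """Monte Carlo estimate of both sides of
    E<DF(X), phi> = E[F(X) * int_0^1 h_s dX_s]
    for the illustrative choice F(X) = X_1**3 and h = 1 (phi(t) = t)."""
    rng = random.Random(seed)
    lhs_sum = 0.0
    rhs_sum = 0.0
    for _ in range(n_paths):
        # Terminal value of a standard Wiener process on [0, 1].
        x1 = rng.gauss(0.0, 1.0)
        # Left-hand side: derivative of F(X + eps * phi) at eps = 0.
        # Shifting the path by eps * phi shifts X_1 by eps * phi(1) = eps.
        lhs_sum += ((x1 + eps) ** 3 - (x1 - eps) ** 3) / (2.0 * eps)
        # Right-hand side: F(X) times the Ito integral int_0^1 1 dX_s = X_1.
        rhs_sum += x1 ** 3 * x1
    return lhs_sum / n_paths, rhs_sum / n_paths


if __name__ == "__main__":
    lhs, rhs = check_integration_by_parts()
    print(f"E<DF, phi> ~ {lhs:.3f},  E[F * int h dX] ~ {rhs:.3f}")
```

The right-hand side needs no derivative of F at all, which is the practical appeal of the formula; the price is a higher Monte Carlo variance for the weighted estimator.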

Clark-Ocone formula

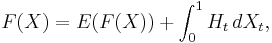

One of the most useful results from Malliavin calculus is the Clark-Ocone theorem, which allows the process in the martingale representation theorem to be identified explicitly. A simplified version of this theorem is as follows:

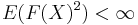

For ![F: C[0,1] \to \R](/2012-wikipedia_en_all_nopic_01_2012/I/1ee24a4aab430be3208a1634d90f27f5.png) satisfying

satisfying  which is Lipschitz and such that F has a strong derivative kernel, in the sense that for

which is Lipschitz and such that F has a strong derivative kernel, in the sense that for  in C[0,1]

in C[0,1]

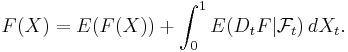

then

where H is the previsible projection of F'(x, (t,1]) which may be viewed as the derivative of the function F with respect to a suitable parallel shift of the process X over the portion (t,1] of its domain.

This may be more concisely expressed by

Much of the work in the formal development of the Malliavin calculus involves extending this result to the largest possible class of functionals F by replacing the derivative kernel used above by the "Malliavin derivative" denoted  in the above statement of the result.

in the above statement of the result.
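A short worked example may help to see the concise form F(X) = E(F(X)) + ∫ E(D_t F | F_t) dX_t in action. The functional F(X) = X_1^2 is an illustrative choice made here; it is not Lipschitz, so it falls under the extended version of the theorem rather than the simplified statement above.

```latex
\begin{aligned}
F(X) &= X_1^2, \qquad E(F(X)) = 1, \\
D_t F &= 2 X_1 \quad (0 \le t \le 1), \qquad
E(D_t F \mid \mathcal{F}_t) = 2 X_t, \\
X_1^2 &= 1 + \int_0^1 2 X_t \, dX_t .
\end{aligned}
```

The last line agrees with what Itô's formula gives for the square of a Wiener process, which is a useful consistency check.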

Skorokhod integral

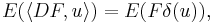

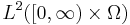

The Skorokhod integral operator which is conventionally denoted δ is defined as the adjoint of the Malliavin derivative thus for u in the domain of the operator which is a subset of  , for F in the domain of the Malliavin derivative, we require

, for F in the domain of the Malliavin derivative, we require

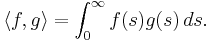

where the inner product is that on  viz

viz

The existence of this adjoint follows from the Riesz representation theorem for linear operators on Hilbert spaces.

It can be shown that if u is adapted then

where the integral is to be understood in the Itô sense. Thus this provides a method of extending the Itô integral to non adapted integrands.
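To illustrate the non-adapted case, consider the integrand that equals the terminal value W_1 on [0,1] and zero afterwards; this example is an assumption chosen here, not taken from the text. It uses the standard product rule δ(Gv) = G δ(v) − ⟨DG, v⟩ for a random variable G and a deterministic v, an identity not stated above, and then checks the defining duality relation with F = W_1^2.

```latex
\begin{aligned}
u_t &= W_1 \, \mathbf{1}_{[0,1]}(t), \\
\delta(u) &= W_1 \int_0^1 dW_t - \int_0^1 D_t W_1 \, dt = W_1^2 - 1, \\
E(\langle DF, u \rangle) &= E\Bigl(\int_0^1 2 W_1 \cdot W_1 \, dt\Bigr) = 2, \\
E(F \, \delta(u)) &= E(W_1^4) - E(W_1^2) = 3 - 1 = 2
  \qquad \text{for } F = W_1^2 .
\end{aligned}
```

Note that δ(u) has mean zero, whereas the naive product W_1 · W_1 has mean 1; the correction term −1 is what the non-adaptedness of u costs.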

Applications

The calculus allows integration by parts with random variables; this operation is used in mathematical finance to compute the sensitivities of financial derivatives (the so-called Greeks). The calculus also has applications in, for example, stochastic filtering.
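The sensitivity computation can be sketched for one concrete case. Everything specific below is an assumption made for illustration and is not taken from the text: the Black-Scholes model for the underlying, the European call payoff, the parameter values, and the function names `malliavin_delta_call` and `black_scholes_delta_call`. Under that model, integration by parts turns the derivative of the price with respect to the initial value S_0 into an expectation of the payoff times the weight W_T / (S_0 σ T), so the payoff itself never has to be differentiated. The closed-form delta is included only as a reference value.

```python
import math
import random


def malliavin_delta_call(s0, strike, rate, sigma, maturity,
                         n_paths=200_000, seed=0):
    """Monte Carlo delta of a European call under Black-Scholes dynamics,
    estimated as exp(-r T) * E[payoff(S_T) * W_T / (S_0 * sigma * T)]."""
    rng = random.Random(seed)
    sqrt_t = math.sqrt(maturity)
    total = 0.0
    for _ in range(n_paths):
        # Terminal Brownian value W_T ~ N(0, T).
        w_t = sqrt_t * rng.gauss(0.0, 1.0)
        # Terminal asset price under geometric Brownian motion.
        s_t = s0 * math.exp((rate - 0.5 * sigma ** 2) * maturity + sigma * w_t)
        payoff = max(s_t - strike, 0.0)
        # Integration-by-parts weight replacing the derivative of the payoff.
        weight = w_t / (s0 * sigma * maturity)
        total += payoff * weight
    return math.exp(-rate * maturity) * total / n_paths


def black_scholes_delta_call(s0, strike, rate, sigma, maturity):
    """Closed-form Black-Scholes call delta N(d1), used as a reference."""
    d1 = (math.log(s0 / strike) + (rate + 0.5 * sigma ** 2) * maturity) / (
        sigma * math.sqrt(maturity))
    return 0.5 * (1.0 + math.erf(d1 / math.sqrt(2.0)))


if __name__ == "__main__":
    args = (100.0, 100.0, 0.05, 0.2, 1.0)
    print("weighted Monte Carlo delta:", malliavin_delta_call(*args))
    print("closed-form delta:         ", black_scholes_delta_call(*args))
```

The weighted estimator is most useful for discontinuous payoffs, where differentiating the payoff pathwise is not possible; for a smooth payoff such as this call it mainly serves as a check against the closed form.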


External links

- Friz, Peter K. (2005-04-10). "An Introduction to Malliavin Calculus" (PDF). Archived from the original on 2007-04-17. http://web.archive.org/web/20070417205303/http://www.statslab.cam.ac.uk/~peter/malliavin/Malliavin2005/mall.pdf. Retrieved 2007-07-23. Lecture Notes, 43 pages
